NKU Team’s Open-source MDTv2 can Speed up Training of Sora’s Core Component DiT by More Than 10 Times

At the beginning of 2024, OpenAI (an American artificial intelligence (AI) research organization) released Sora, the first AI-enabled text-to-video large-scale model. By simulating the dynamic changes in the real world through computer vision technology, it can generate 60-second smooth and realistic videos. It is regarded as another breakthrough in AI technology after ChatGPT. However, as can be seen from messed-up videos generated by the Sora, AI still has difficulties in “grasping” the physical world quickly and accurately.

Recently, Masked Diffusion Transformer (MDT), an international joint research outcome achieved by a team led by Professor Cheng Mingming of Nankai University and Nankai International Advanced Research Institute (Futian, Shenzhen), has increased the training speed by more than 10 times compared with Sora’s core component Diffusion Transformer (DiT), outperforming SoTA’s (best) image generation quality and learning speed. It achieved an FID (a measure of image quality) score of 1.58 on the ImageNet benchmark (performance testing of large image classification datasets), which outperforms the models put forward by well-known companies such as Meta and Nvidia. The research team has also made open all the MDT source code.

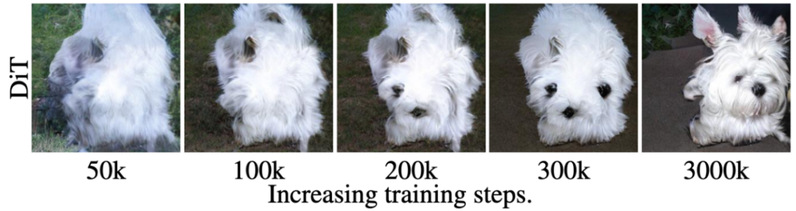

Diffusion models, represented by DiT, which is one of Sora’s core components, cannot efficiently learn the semantic relationships between various parts of objects in the image, which leads to low convergence efficiency in the training process. At the same time, larger models and data scales consume a lot of computing power, causing training costs to skyrocket.

Figure 1: DiT fails to accurately generate images at 300,000th training step

Cheng Mingming, a professor at Nankai University and Nankai International Advanced Research Institute (Futian, Shenzhen); Gao Shanghua, a doctoral student; Zhou Pan, a doctoral student at Donghai Group Sea AI Lab; and Yan Shuicheng, a fellow of the Singapore Academy of Engineering, IEEE/ACM Fellow, and president of Kunlun 2050 Research Institute, jointly proposed a solution. By introducing contextual representation learning in the diffusion training process, it can reconstruct the complete information of incomplete images using the context information on image objects, so as to learn the association between semantic components in the image, and improve the quality of image generation and learning speed. The paper, entitled “Masked Diffusion Transformer is a Strong Image Synthesizer”, was presented on International Conference on Computer Vision (ICCV) 2023 (a top international conference on computer vision).

Figure 2: FID performance of DiT-S/2 baseline, MDT-S/2, and MDTv2-S/2 at different training steps and training times

Recently, the research team upgraded MDT to v2. A more efficient macro network structure was introduced to MDTv2 to optimize the learning process. The training process of the model can be accelerated by adopting better training strategies such as faster Adan optimizer and expanded mask ratio. Experiment results show that a better semantic understanding of the physical world through visual representation learning can improve the effect of the generative model in simulating the physical world.

URL:

https://arxiv.org/abs/2303.14389

(Edited and translated by Nankai News Team.)